Load Testing and Penetration Testing: Why They Matter

Background

Load testing lets us simulate many concurrent requests, which is especially important for a startup that doesn't have many users yet. By load testing, we can make sure our system is ready for more users in the future, and we get the chance to optimize before that growth arrives.

Penetration testing is equally important. It helps us detect vulnerabilities in our system so we can fix them early, keeping the system as secure as possible. That matters when building a platform that will be used by hundreds or even thousands of users.

How to Load Test?

A useful and intuitive tool I discovered for load testing the backend is k6. k6 simulates many users sending requests concurrently, putting "load" on our application so we can see whether the backend handles the requests gracefully. I found this source really helpful for understanding k6 and setting it up.

Firstly, we need to install k6. I use Docker for this:

```shell
docker pull grafana/k6
```

Next, we create a test file:

```shell
docker run --rm -i -v ${PWD}:/app -w /app grafana/k6 new load-test.spec.js
```

This generates a script from k6's template, which we then edit.

For example, I wanted to test the GET events endpoint, because its query involves quite a lot of joins, and SQL queries with many joins tend to be more expensive.

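```javascript
// load test file (load-test.spec.js)
import http from 'k6/http';
import { sleep } from 'k6';

export const options = {
  // Number of virtual users (VUs) at the start; the stages ramp up from here.
  vus: 10,
  // Ramp linearly to 100, then 200, then 300 VUs, 10 seconds per stage.
  stages: [
    { duration: '10s', target: 100 },
    { duration: '10s', target: 200 },
    { duration: '10s', target: 300 },
  ],
  // Tag every metric from this run with the environment under test.
  tags: {
    environment: 'staging',
  },
};

// Each VU runs this function in a loop for the duration of the test.
export default function () {
  const url =
    'https://eventable-be-staging.up.railway.app/api/events?page=1&limit=24&sortOrder=asc';
  http.get(url);
  // Wait 1 second between iterations, like a user pausing between actions.
  sleep(1);
}
```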

With this test file, we:

- Set the initial number of virtual users (k6 calls them VUs) to 10, so the test starts by simulating 10 users sending requests at the same time.

- Ramp up to 100 VUs over the first 10 seconds, to 200 over the next 10 seconds, and to 300 over the final 10 seconds.

- Point the test at the GET events endpoint: each VU requests it, waits 1 second, and repeats.
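To see how those stages translate into VU counts over time, here is a small plain-JavaScript sketch (run it with Node, not k6). It assumes the VU count moves linearly from one target to the next, starting from `vus: 10`; it approximates the schedule and is not k6's own scheduler code.

```javascript
// Sketch of a linear VU ramp, assuming the count moves linearly from the
// previous target to the next one. Not k6's actual scheduler.

// Convert a duration string such as '10s' or '2m' to seconds.
// Only the 's' and 'm' units are handled in this sketch.
function toSeconds(duration) {
  const match = /^(\d+(?:\.\d+)?)(s|m)$/.exec(duration);
  if (!match) throw new Error(`Unsupported duration: ${duration}`);
  const value = Number(match[1]);
  return match[2] === 'm' ? value * 60 : value;
}

// Target number of VUs at time t (seconds) for the given start and stages.
function targetVUsAt(t, startVUs, stages) {
  let from = startVUs;
  let elapsed = 0;
  for (const stage of stages) {
    const length = toSeconds(stage.duration);
    if (t <= elapsed + length) {
      const progress = length === 0 ? 1 : (t - elapsed) / length;
      return Math.round(from + (stage.target - from) * progress);
    }
    from = stage.target;
    elapsed += length;
  }
  // Beyond the last stage, this sketch simply returns the final target.
  return from;
}

const stages = [
  { duration: '10s', target: 100 },
  { duration: '10s', target: 200 },
  { duration: '10s', target: 300 },
];

for (const t of [0, 1, 5, 10, 20, 30]) {
  console.log(`t=${t}s -> ~${targetVUsAt(t, 10, stages)} VUs`);
}
```

Halfway through the first stage, for example, the sketch gives 55 VUs (10 plus half of the 90-VU climb to 100).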

Once the file is ready, we run it (I use the Windows method here, since I'm load testing from a Windows machine):

```shell
cat load-test.spec.js | docker run --rm -i grafana/k6 run -
```

Here are the results I received:


```text
execution: local
script: -
output: -

scenarios: (100.00%) 1 scenario, 300 max VUs, 1m0s max duration (incl. graceful stop):
* default: Up to 300 looping VUs for 30s over 3 stages (gracefulRampDown: 30s, gracefulStop: 30s)

running (0m01.0s), 018/300 VUs, 0 complete and 0 interrupted iterations
default [ 3% ] 018/300 VUs 01.0s/30.0s

running (0m02.0s), 027/300 VUs, 13 complete and 0 interrupted iterations
default [ 7% ] 027/300 VUs 02.0s/30.0s

...

running (0m29.0s), 289/300 VUs, 2870 complete and 0 interrupted iterations
default [ 97% ] 289/300 VUs 29.0s/30.0s

running (0m30.0s), 299/300 VUs, 3127 complete and 0 interrupted iterations
default [ 100% ] 299/300 VUs 30.0s/30.0s

running (0m31.0s), 095/300 VUs, 3332 complete and 0 interrupted iterations
default ↓ [ 100% ] 299/300 VUs 30s

data_received..................: 47 MB 1.5 MB/s
data_sent......................: 603 kB 19 kB/s
http_req_blocked...............: avg=14.75ms min=157ns med=629ns max=1.16s p(90)=1.15µs p(95)=138.4ms
http_req_connecting............: avg=320.08µs min=0s med=0s max=78.31ms p(90)=0s p(95)=2.49ms
http_req_duration..............: avg=374.65ms min=202.43ms med=388.79ms max=1.92s p(90)=455.14ms p(95)=508.04ms
  { expected_response:true }...: avg=400.11ms min=258.16ms med=395.19ms max=1.92s p(90)=460.48ms p(95)=518.19ms
http_req_failed................: 19.11% ✓ 655 ✗ 2772
http_req_receiving.............: avg=104.82ms min=15.66µs med=143.56ms max=1.42s p(90)=159.82ms p(95)=165.39ms
http_req_sending...............: avg=117.32µs min=14.1µs med=101.17µs max=7.72ms p(90)=174.25µs p(95)=216.29µs
http_req_tls_handshaking.......: avg=13.94ms min=0s med=0s max=1.16s p(90)=0s p(95)=135.37ms
http_req_waiting...............: avg=269.71ms min=202.17ms med=249.63ms max=1.76s p(90)=327.52ms p(95)=384.54ms
http_reqs......................: 3427 109.453282/s
iteration_duration.............: avg=1.39s min=1.2s med=1.39s max=2.92s p(90)=1.51s p(95)=1.55s
iterations.....................: 3427 109.453282/s
vus............................: 95 min=18 max=299
vus_max........................: 300 min=300 max=300

running (0m31.3s), 000/300 VUs, 3427 complete and 0 interrupted iterations
default ✓ [ 100% ] 000/300 VUs 30s
```

Notice these lines:

```text
http_req_duration..............: avg=374.65ms min=202.43ms med=388.79ms max=1.92s p(90)=455.14ms p(95)=508.04ms
  { expected_response:true }...: avg=400.11ms min=258.16ms med=395.19ms max=1.92s p(90)=460.48ms p(95)=518.19ms
```

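p(95)=508.04ms means that 95% of the requests completed in 508.04 ms or less; only the slowest 5% took longer. The { expected_response:true } line reports the same statistics for responses k6 treated as successful (by default, status codes 200 to 399). That distinction matters here, because the http_req_failed line shows that 19.11% of the requests (655 of 3,427) failed at this load.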
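To make the percentile concrete, here is a minimal plain-JavaScript sketch that computes a nearest-rank percentile over a list of durations. The durations are made-up sample values, not data from the run above, and k6 may use a different interpolation rule for its own p(N) values; the sketch only illustrates what the number means.

```javascript
// Nearest-rank percentile: the smallest value such that at least p% of
// the samples are less than or equal to it.
function percentile(values, p) {
  if (values.length === 0) throw new Error('No values');
  const sorted = [...values].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(rank, 1) - 1];
}

// Hypothetical durations for 20 requests, in milliseconds.
const durations = [
  210, 230, 250, 260, 280, 300, 320, 340, 360, 380,
  390, 400, 410, 420, 440, 450, 470, 490, 510, 1900,
];

const p95 = percentile(durations, 95);
// Share of requests that finished at or below the p(95) value.
const atOrBelow = durations.filter((d) => d <= p95).length / durations.length;
console.log(`p(95) = ${p95} ms; ${atOrBelow * 100}% of requests took <= p(95)`);
```

Note how the single 1,900 ms outlier affects the maximum but not p(95), which is why percentiles are usually more informative than the max for latency.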

We can adjust the number of virtual users, set up different test stages, and test other endpoints to cover a variety of scenarios. I think that makes k6 a really flexible load testing tool.
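k6 can also turn numbers like these into pass/fail criteria through its thresholds option. Below is the options object from the test file above with two thresholds added; the limits (fewer than 1% failed requests, p(95) under 500 ms) are example values chosen for illustration, not recommendations. With the results above, both would fail, since 19.11% of requests failed and p(95) was 508.04 ms. When a threshold fails, k6 marks it in the end-of-test summary and exits with a non-zero status, which is handy in CI.

```javascript
// Options from the test file above, with example thresholds added.
// The limits are illustrative assumptions; tune them to your own targets.
export const options = {
  vus: 10,
  stages: [
    { duration: '10s', target: 100 },
    { duration: '10s', target: 200 },
    { duration: '10s', target: 300 },
  ],
  thresholds: {
    // Fail the run if 1% or more of requests fail.
    http_req_failed: ['rate<0.01'],
    // Fail the run if the 95th percentile duration is 500 ms or more.
    http_req_duration: ['p(95)<500'],
  },
  tags: {
    environment: 'staging',
  },
};
```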

How to Do Penetration Testing?

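A tool I find really useful for this task is Burp Suite. It lets us act as an attacker looking for vulnerabilities in our app, so we can test the app's security. Burp works as a proxy between the browser and the app, so it can capture the requests the frontend sends.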

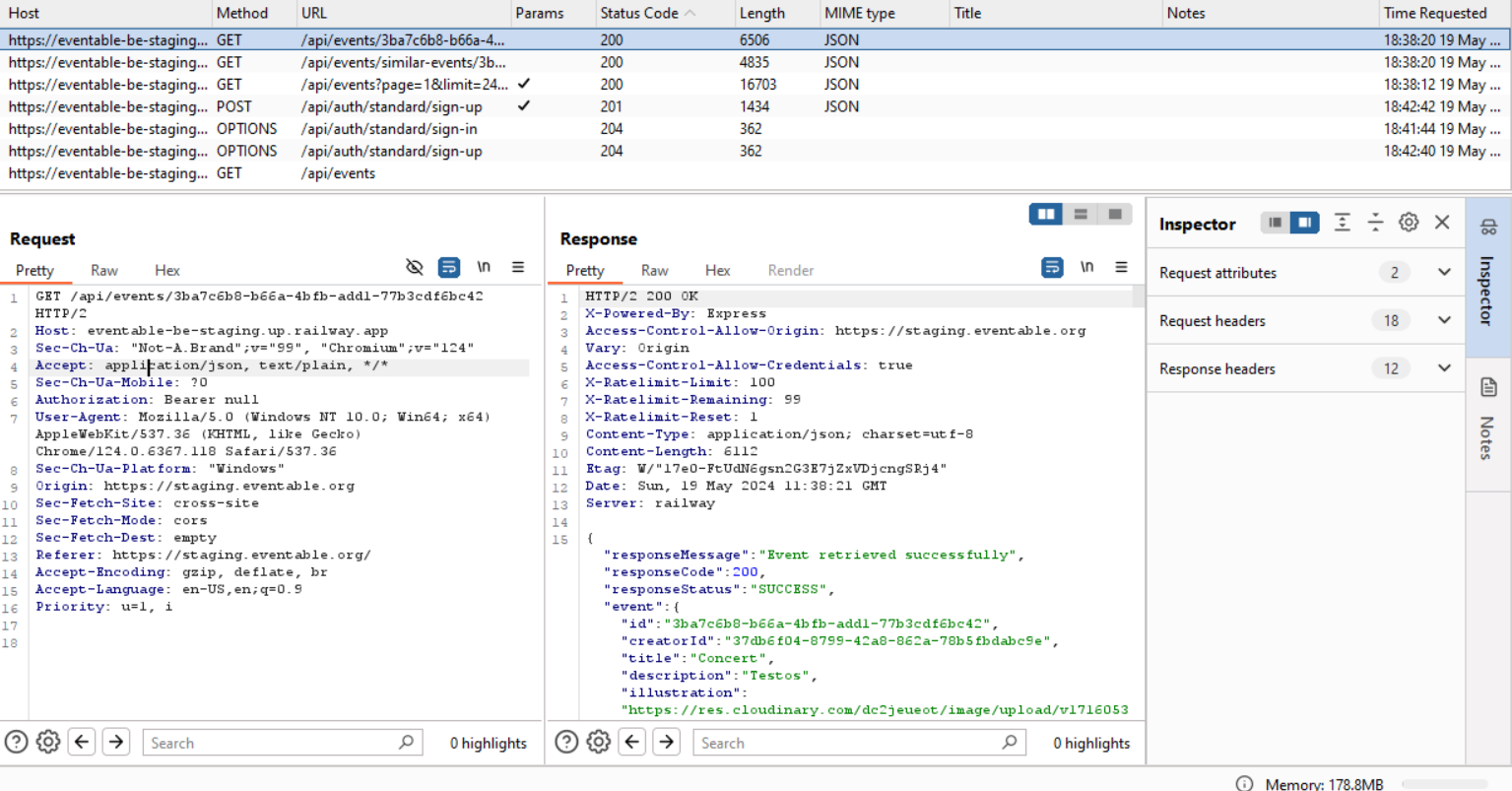

As we interact with the frontend, we can see the endpoints being hit (I've filtered the log to show only requests to the backend):

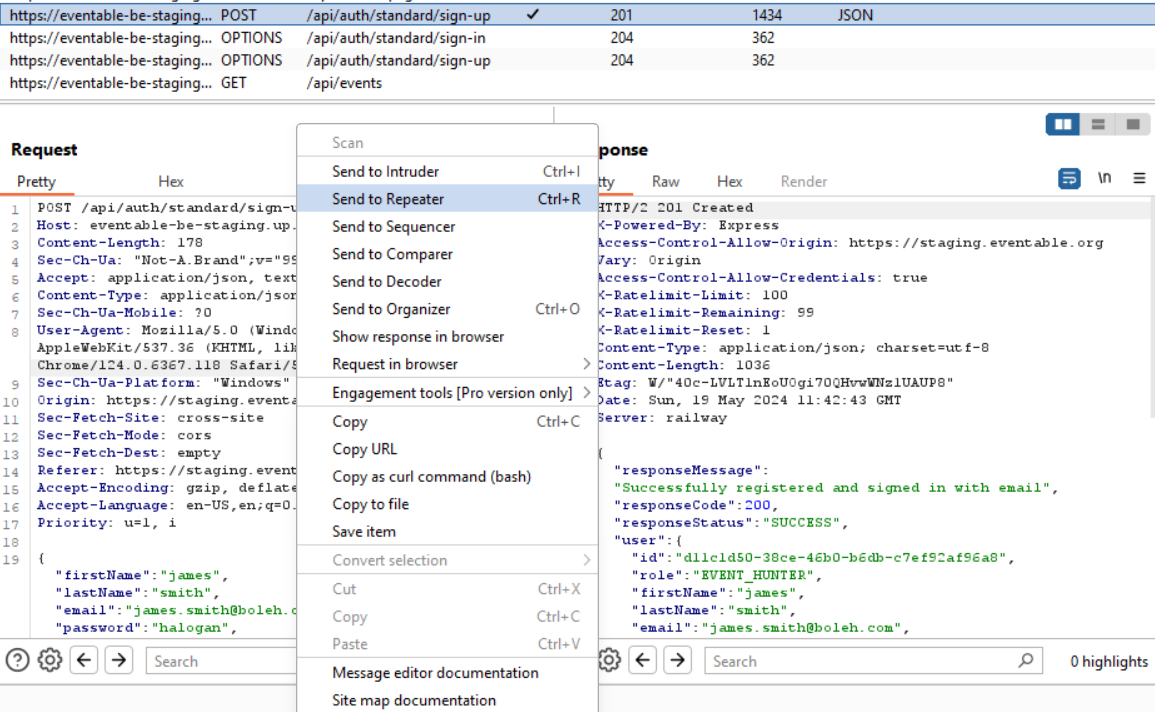

We can then choose an endpoint to inspect and send it to Repeater (Repeater is where we can resend a request, optionally after modifying it):

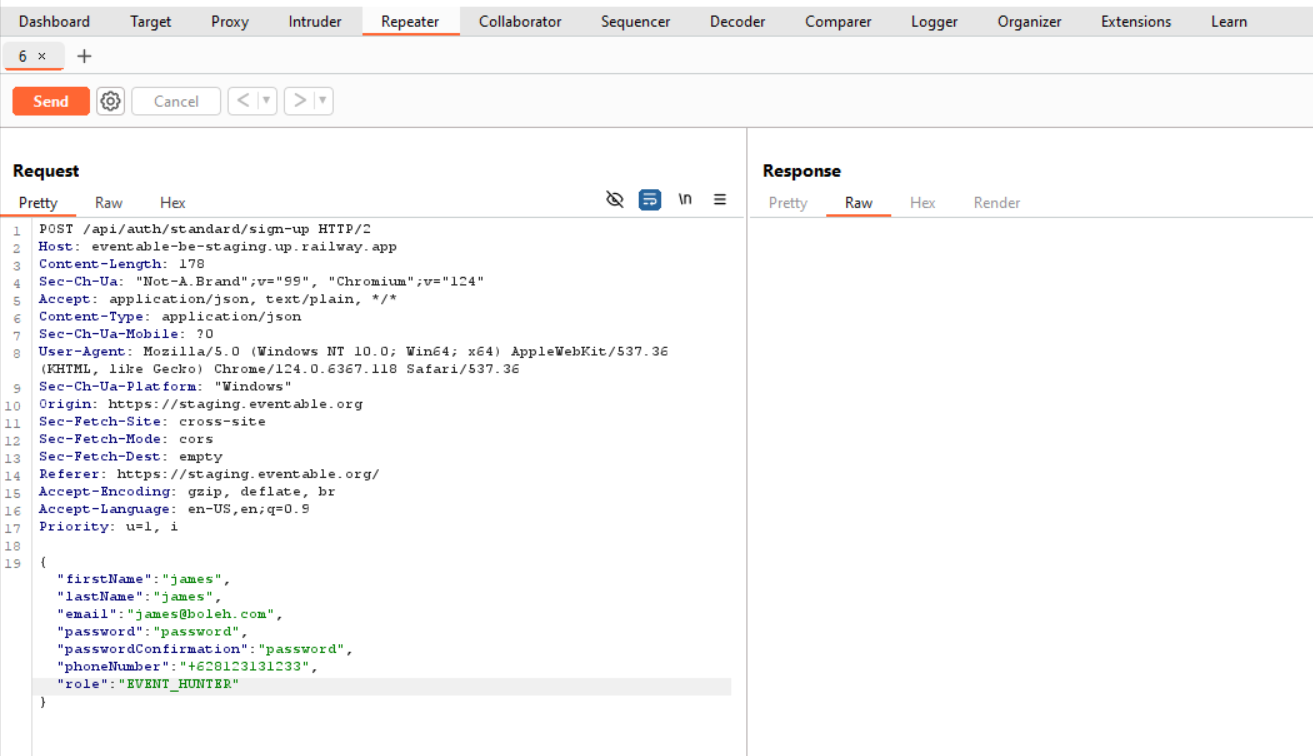

We can then see the previous request being made:

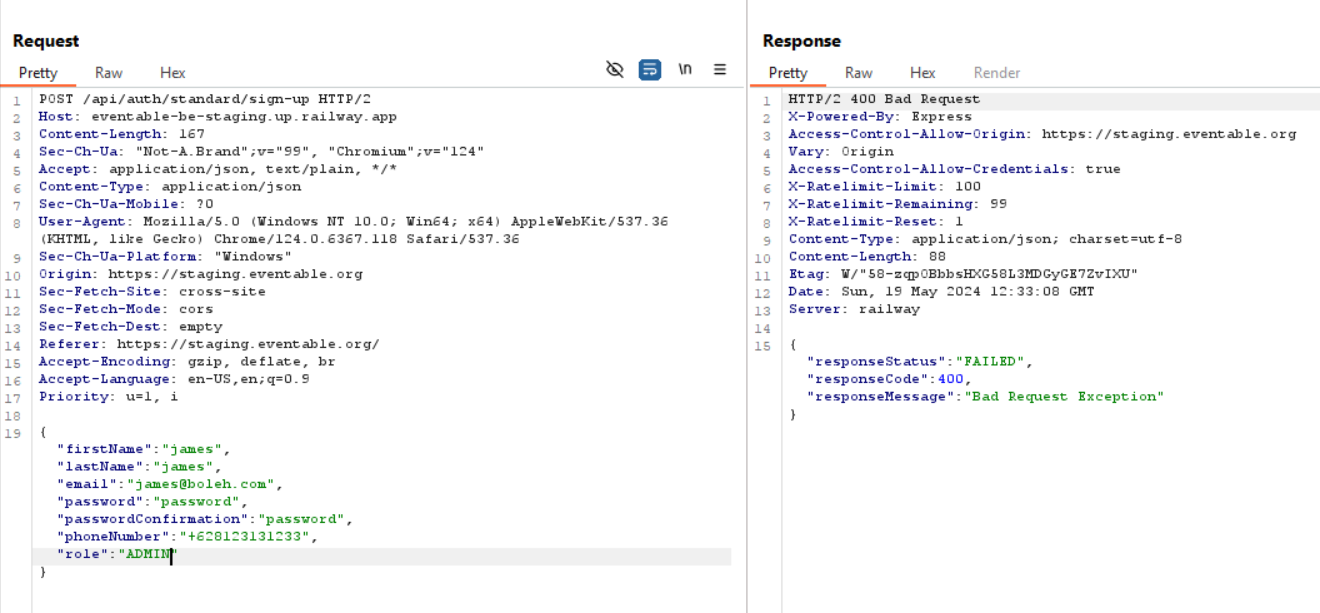

and try to find some vulnerability. As an example, I try to register a user with a role of ADMIN:

As we can see, the request is rejected.
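One common way for a backend to block this kind of privilege escalation (often called mass assignment) is to accept only an allowlist of fields on registration and always assign the role on the server. Here is a minimal plain-JavaScript sketch of that idea; the field names (email, password, name) and the default USER role are hypothetical, not taken from this app.

```javascript
// Hypothetical allowlist of fields a client may send when registering.
const ALLOWED_REGISTRATION_FIELDS = new Set(['email', 'password', 'name']);

// Validate a registration payload. Unknown fields (such as "role") cause
// the request to be rejected; the role is always set by the server.
function validateRegistration(body) {
  const unexpected = Object.keys(body).filter(
    (key) => !ALLOWED_REGISTRATION_FIELDS.has(key),
  );
  if (unexpected.length > 0) {
    return { ok: false, status: 400, error: `Unexpected fields: ${unexpected.join(', ')}` };
  }
  // Password hashing and other checks are omitted from this sketch.
  return { ok: true, user: { ...body, role: 'USER' } };
}

console.log(validateRegistration({ email: 'a@example.com', password: 'secret', role: 'ADMIN' }));
```

Rejecting unknown fields, rather than silently dropping them, is a design choice: it makes tampering attempts like the one above show up as errors.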

We can then check other endpoints for vulnerabilities in the same way. If we find any, we can fix them before they are exploited.

Conclusion

k6 is very useful for load testing our application. Burp Suite is just as important for detecting security vulnerabilities in our application so that we can fix them.